Do your past papers, my physics teacher says. And I do - I did a 2018 Paper 1 for revision, and got an A, nearly an A*, on it. But that made me wonder how ChatGPT would do if it had to sit the exam.

If you want to follow along with the exam, click on the link above to read through the paper. Maybe even try it and see if you can beat the AI!

Many people have already discussed ChatGPT's tendency to miscount the r's in the word strawberry. Despite OpenAI CEO Sam Altman's claims, I find GPT-4o, the current model, not that good at maths, or at least not at calculus. That's kind of understandable, except it isn't: WolframAlpha is very capable at it. Now, these are different types of tool - one is an LLM, the other may as well be a calculator, so it's like comparing apples and strawberries - yet I still think it shows how much room ChatGPT has to improve.

However, ChatGPT has the knowledge of countless scraped articles to support it, and as such I was confident it would do well. I asked my friends in a poll how they thought it would do. Some didn't vote, and some who did picked the intentionally surreal choices (which acted as controls), but the rest firmly put it in the 70-79 mark range.

I suppose this will only make sense if you do A Level physics with the Edexcel exam board. Their Paper 1 exams test one's knowledge of mechanics, angular velocity, electricity, electric fields, particle physics, and magnetism. The test is out of ninety marks. Grades run from A* at the top down to E, with U at the bottom. For context, the A* boundary in 2018 was only about 70%.

The marking took a while, because ChatGPT limits photo uploads in a chat, so it takes time to get through all of the questions. In the end, the AI got 69/90 (about 77%), comfortably an A*. There are a few things to remember about this result, though:

- This was one exam paper from one exam board, aimed at a group of young adults, so it doesn't brilliantly reflect how good ChatGPT is at physics;

- ChatGPT wasn't necessarily wrong at times; it merely said things which weren't on the mark scheme. I suppose you could say ChatGPT would be bad at taking British exams, which is an entirely different topic of discussion;

- I didn't put the exam up against other LLMs like Gemini or Claude, mainly because I didn't intend this to be in-depth research or a competition. I merely chose ChatGPT because it's the LLM I use the most (and I've done so for over a year now, so I'm also very familiar with it).

I suppose I should also explain the format of the exam before I discuss ChatGPT's faults: the first ten questions are all multiple choice, then you have eight extended response questions. It should go without saying that those final eight are by far the most crucial.

ChatGPT coped well with the multiple choice, getting all but one question right. That question asked "What of the following are the base units of impulse?", and GPT's limitations became clear here. First of all, it said two options were correct, which is impossible in a multiple choice question, at least when you have a rigid mark scheme to go with it. Secondly, it didn't interpret "base units" correctly. In the context of the exam, it's rather clear they mean SI base units, the seven fundamental units such as the metre, kilogram and second. ChatGPT, however, went straight to any valid unit of impulse, and so thought A and D were correct when only D was.
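
For anyone who wants the distinction spelled out, here is the standard reduction of impulse to SI base units. I haven't reproduced the exam's options here, so treat this as the usual textbook dimensional analysis rather than a quote from the paper or the mark scheme:

```latex
\begin{align*}
\text{impulse} &= F \,\Delta t \\
[\text{impulse}] &= \mathrm{N\,s}
  = \left(\mathrm{kg\,m\,s^{-2}}\right)\mathrm{s}
  = \mathrm{kg\,m\,s^{-1}}
\end{align*}
```

So N s is a perfectly valid unit of impulse, but the newton is a derived unit, and only kg m s⁻¹ is written purely in base units.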

ChatGPT's limitations with maths were also routinely exposed. In question 11a, when asked to calculate the resistivity, its answer somehow ended up three orders of magnitude out, despite all the calculations being correct up to the final innings. A similar issue meant its efficiency in question 13b was wrong by an order of magnitude despite correct working out. I'm not too sure of the cause, but my best guess is that something in how ChatGPT handles numbers made it misread a value at random when giving its final output. There was also the time it rounded 123.6 to 125 in question 15b, which I found odd - who rounds to the nearest five?
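
To show why that rounding looked so odd to me, here's a quick Python sketch. The helper names `round_sig` and `round_to_nearest` are my own, made up for illustration; the only number taken from the paper is 123.6, and I'm assuming three significant figures as the conventional choice.

```python
import math

def round_sig(x: float, sig: int) -> float:
    """Round x to `sig` significant figures."""
    if x == 0:
        return 0.0
    # Number of decimal places needed for `sig` significant figures.
    digits = sig - 1 - math.floor(math.log10(abs(x)))
    return round(x, digits)

def round_to_nearest(x: float, step: float) -> float:
    """Round x to the nearest multiple of `step`."""
    return step * round(x / step)

value = 123.6
print(round_sig(value, 3))         # 124.0 (three significant figures)
print(round_to_nearest(value, 5))  # 125   (nearest five, which matches ChatGPT's answer)
```

Rounding to three significant figures gives 124, whereas 125 only appears if you round to the nearest five.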

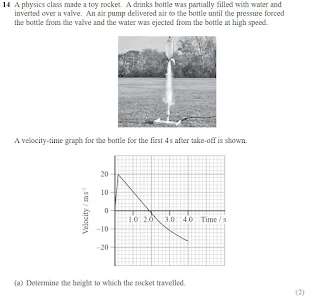

There was also question 14, where it got 0 marks. The main problem here was that ChatGPT, on seeing this graph, immediately treated it as a triangle but got the measurements wrong. If you want the height the bottle reached, you need the area under the graph up to the point where the bottle's velocity is zero, which is when the bottle begins moving back towards the ground. ChatGPT took four seconds as that time, which is somewhat logical as this is when the graph stops taking new readings - yet this is irrelevant to the maximum height.
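
Here's a minimal Python sketch of the area-under-the-graph idea. The readings are numbers I've made up for a straight-line velocity-time graph (they are not the exam's data), and `max_height` is a hypothetical helper name. It just adds up trapezium areas and stops where the velocity reaches zero.

```python
# Made-up velocity-time readings, NOT taken from the exam paper.
times = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5, 3.0, 3.5, 4.0]                    # s
velocities = [15.0, 10.0, 5.0, 0.0, -5.0, -10.0, -15.0, -20.0, -25.0]   # m/s

def max_height(times, velocities):
    """Area under the v-t graph from the start until v first reaches zero."""
    height = 0.0
    for i in range(len(times) - 1):
        t0, t1 = times[i], times[i + 1]
        v0, v1 = velocities[i], velocities[i + 1]
        if v0 <= 0:
            # Already at (or past) the top of the flight.
            return height
        if v1 <= 0:
            # Interpolate linearly to find where the line crosses v = 0,
            # then add the final triangle up to that point.
            t_zero = t0 + (t1 - t0) * v0 / (v0 - v1)
            return height + 0.5 * v0 * (t_zero - t0)
        # Ordinary trapezium between two positive readings.
        height += 0.5 * (v0 + v1) * (t1 - t0)
    return height  # velocity never reached zero within the readings

# Correct reading: stop at v = 0 (t = 1.5 s for these made-up numbers).
print(max_height(times, velocities))    # 11.25

# Roughly the kind of misreading described above: using the whole
# 4 s of readings as the base of the triangle.
print(0.5 * times[-1] * velocities[0])  # 30.0
```

With these made-up numbers, using the end of the readings instead of the zero-velocity point nearly triples the height, which is why the choice of time matters so much.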

The last question I want to discuss, however, is 15a, which asked students to describe the standard model of particles. ChatGPT did okay, but made two costly omissions: it didn't mention muons or antiparticles. Neither did I when I did the paper, admittedly; yet ChatGPT did mention W bosons and gluons, amongst other particles. This best reveals its thinking: even though I told it these were A Level questions, ChatGPT didn't suddenly take on the thinking of an eighteen year old. Instead, it answered the question as per the data it's been fed. Notably, 15a said "You should identify the fundamental particles", and it did - all seventeen. At A Level, you only study the quarks and leptons, with photons coming up occasionally, and the mark scheme reflected this.

Ultimately, this reveals that whilst ChatGPT is rather competent at answering science questions, it's not necessarily the best, due to several factors: mostly not understanding context, failing to carry calculations through accurately, and thinking too much.

Screenshot of exam paper belongs to Pearson Education
